The field of brain-computer interfaces (BCIs) has witnessed remarkable advancements in recent years, particularly in the domain of thought classification speed. Researchers and engineers are pushing the boundaries of what's possible, enabling faster and more accurate interpretation of neural signals. This progress holds immense potential for applications ranging from medical rehabilitation to augmented communication systems.

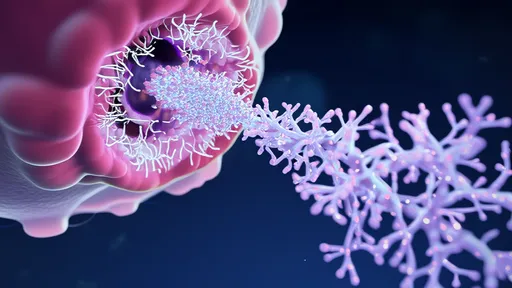

At the core of this technological revolution lies the ability to decode and classify neural patterns with unprecedented speed. Modern BCIs can now distinguish between different mental commands or intentions in near real time, a feat that would have been difficult to achieve just a decade ago. The implications for individuals with motor impairments are particularly profound, as these systems offer new avenues for interaction with the external world.

Neural signal processing has undergone significant optimization to achieve these rapid classification rates. Advanced machine learning algorithms, particularly deep neural networks, have demonstrated exceptional capability in parsing the complex patterns of brain activity. These systems can identify subtle variations in neural signatures that correspond to distinct thoughts or commands, doing so with both speed and precision.
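As a rough illustration of what a deep-learning decoder does at run time, the sketch below passes pre-extracted feature vectors through a small fully connected network and reads off class probabilities. The layer sizes, the use of ready-made features, and the random (untrained) weights are all hypothetical. Practical decoders are trained on recorded data and often use convolutional or recurrent layers instead.

```python
import numpy as np

def softmax(z: np.ndarray) -> np.ndarray:
    """Numerically stable softmax over the last axis."""
    z = z - z.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def mlp_classify(features: np.ndarray, weights) -> np.ndarray:
    """Forward pass of a small fully connected network.

    features: (n_windows, n_features) array of pre-extracted neural features.
    weights:  list of (W, b) pairs; ReLU between layers, softmax at the output.
    Returns an (n_windows, n_classes) array of class probabilities.
    """
    h = features
    for i, (W, b) in enumerate(weights):
        h = h @ W + b
        if i < len(weights) - 1:
            h = np.maximum(h, 0.0)  # ReLU on hidden layers only
    return softmax(h)

# Hypothetical sizes: 64 features, 32 hidden units, 4 mental commands.
rng = np.random.default_rng(0)
n_features, n_hidden, n_classes = 64, 32, 4
weights = [
    (rng.normal(scale=0.1, size=(n_features, n_hidden)), np.zeros(n_hidden)),
    (rng.normal(scale=0.1, size=(n_hidden, n_classes)), np.zeros(n_classes)),
]
x = rng.normal(size=(8, n_features))  # 8 synthetic feature windows
probs = mlp_classify(x, weights)
predicted = probs.argmax(axis=1)      # one command index per window
```

Inference here amounts to a few matrix multiplications per window, which is far cheaper than training and helps explain how trained networks can keep pace with streaming data.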

The temporal resolution of modern BCIs is a critical factor in their effectiveness. Reducing the latency between thought generation and system response has been a primary focus of recent research. Some experimental systems now achieve classification times measured in milliseconds, approaching the speed of natural neuromuscular responses, although end-to-end latency also includes the time spent collecting enough signal for each decision. This near-instantaneous translation of intention into action makes human-machine interaction more intuitive and seamless.
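A back-of-the-envelope way to see where latency comes from is to model a sliding-window decoder that emits one decision every `step_ms`, each computed from the last `window_ms` of signal. The function name and all numbers below are hypothetical, and the model assumes the window is at least as long as the step.

```python
def latency_budget(fs_hz: float, window_ms: float, step_ms: float, compute_ms: float) -> dict:
    """Rough latency figures for a sliding-window decoder (assumes window_ms >= step_ms)."""
    return {
        # How many samples the classifier sees per decision and per update.
        "samples_per_window": round(fs_hz * window_ms / 1000),
        "samples_per_step": round(fs_hz * step_ms / 1000),
        # Real-time constraint: each decision must finish before the next one is due.
        "keeps_up": compute_ms <= step_ms,
        # Worst case until the first decision whose window includes a new intent:
        # wait up to one step for the next decision tick, then run the classifier.
        "first_response_ms": step_ms + compute_ms,
        # Upper bound until a decision is based entirely on post-onset signal.
        "full_window_response_ms": window_ms + step_ms + compute_ms,
    }

# Hypothetical settings: 1 kHz sampling, 250 ms windows updated every 50 ms, 10 ms inference.
budget = latency_budget(fs_hz=1000, window_ms=250, step_ms=50, compute_ms=10)
```

With these settings the classifier itself takes only 10 ms, yet a decision built entirely from new signal can take up to 310 ms, which is why window length and update rate matter as much as raw classification speed.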

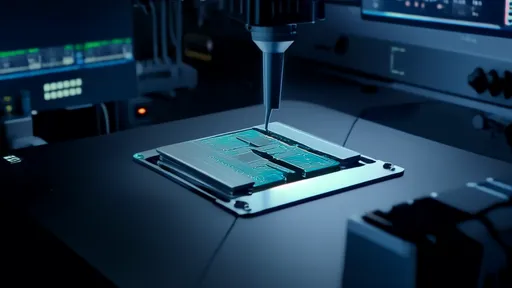

Electrode technology has played a pivotal role in enhancing classification speed. High-density microelectrode arrays sample neural activity in finer detail and across broader cortical regions. This richer spatial information gives classification algorithms more informative inputs, enabling faster and more reliable pattern recognition. At the same time, novel materials and manufacturing techniques have improved signal-to-noise ratios, so less signal needs to be accumulated before a decision can be made with confidence.
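Signal-to-noise ratio is conventionally reported in decibels, $\mathrm{SNR_{dB}} = 10\log_{10}(P_\text{signal}/P_\text{noise})$. The sketch below computes it from separate signal and noise estimates; the 10 Hz "neural" rhythm and 50 Hz interference are synthetic stand-ins, not real recordings.

```python
import numpy as np

def snr_db(signal: np.ndarray, noise: np.ndarray) -> float:
    """Signal-to-noise ratio in decibels: 10 * log10(P_signal / P_noise)."""
    p_signal = np.mean(np.square(signal))  # mean power of the signal estimate
    p_noise = np.mean(np.square(noise))    # mean power of the noise estimate
    return float(10.0 * np.log10(p_signal / p_noise))

# Synthetic stand-ins: 1 s at 1 kHz of a 10 Hz rhythm and 50 Hz interference.
fs = 1000
t = np.arange(fs) / fs
signal = np.sin(2 * np.pi * 10 * t)
noise = 0.1 * np.sin(2 * np.pi * 50 * t)
baseline = snr_db(signal, noise)      # power ratio of 100, i.e. 20 dB
improved = snr_db(signal, noise / 2)  # halving noise amplitude adds about 6 dB
```

Because power scales with amplitude squared, halving the noise amplitude quarters its power and raises the SNR by $20\log_{10}2 \approx 6$ dB.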

Signal processing pipelines have become increasingly sophisticated to handle the demands of rapid classification. Adaptive filtering techniques now effectively separate neural signals from various sources of interference, while dimensionality reduction methods help streamline the computational workload. These optimizations allow classification to occur with minimal delay, even when dealing with the complex, high-dimensional data characteristic of neural recordings.
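The two techniques named above can be sketched with NumPy. `lms_cancel` is a textbook least-mean-squares (LMS) adaptive noise canceller, which assumes a separate reference channel that picks up the interference but not the neural activity; `pca_reduce` uses principal component analysis via SVD as one common choice of dimensionality reduction. Both function names, the filter length, the step size, and the synthetic data are illustrative assumptions rather than any particular system's pipeline.

```python
import numpy as np

def lms_cancel(primary, reference, n_taps=8, mu=0.01):
    """LMS adaptive noise cancellation.

    primary:   recorded channel = neural signal + interference
    reference: signal correlated with the interference but not the neural activity
    Returns e[n] = primary[n] - w . x[n], which converges toward the neural signal.
    """
    w = np.zeros(n_taps)  # adaptive filter weights
    x = np.zeros(n_taps)  # most recent reference samples, newest first
    cleaned = np.empty(len(primary))
    for n in range(len(primary)):
        x = np.roll(x, 1)
        x[0] = reference[n]
        e = primary[n] - w @ x  # subtract the current interference estimate
        w += mu * e * x         # LMS weight update
        cleaned[n] = e
    return cleaned

def pca_reduce(X, n_components):
    """Project (n_samples, n_features) data onto its top principal components."""
    Xc = X - X.mean(axis=0)
    _, s, Vt = np.linalg.svd(Xc, full_matrices=False)
    explained = s**2 / np.sum(s**2)  # fraction of variance per component
    return Xc @ Vt[:n_components].T, explained[:n_components]

# Synthetic example: 10 Hz activity buried under 50 Hz interference, 5 s at 1 kHz.
fs = 1000
t = np.arange(5 * fs) / fs
neural = 0.5 * np.sin(2 * np.pi * 10 * t)
interference = 2.0 * np.sin(2 * np.pi * 50 * t + 0.7)
cleaned = lms_cancel(neural + interference, np.sin(2 * np.pi * 50 * t))

# Synthetic 32-dimensional features that actually lie in a 2-D subspace.
rng = np.random.default_rng(0)
features = rng.normal(size=(200, 2)) @ rng.normal(size=(2, 32))
reduced, explained = pca_reduce(features, n_components=2)
```

After a short adaptation period the canceller output tracks the 10 Hz component, and PCA shrinks 32 feature dimensions to 2 with essentially no loss of variance, which reduces the work left for the classifier.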

The integration of edge computing into BCI systems has significantly contributed to reduced latency. By performing signal processing and classification locally on dedicated hardware rather than relying on cloud-based solutions, these systems minimize transmission delays. This approach not only speeds up response times but also enhances privacy and reliability, as sensitive neural data doesn't need to leave the user's immediate environment.
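On-device processing usually rests on a small buffer that holds only the most recent samples and hands a window to the local classifier at a fixed rate. The `SlidingWindow` class below is a hypothetical sketch of that idea using `collections.deque` with a `maxlen`, so old samples are discarded automatically and nothing needs to be sent off the device.

```python
from collections import deque

import numpy as np

class SlidingWindow:
    """Hypothetical on-device buffer that keeps only the latest samples.

    Once the buffer is full, every `step` new samples it returns an
    (n_samples, n_channels) window for a local classifier.
    """

    def __init__(self, n_samples: int, n_channels: int, step: int):
        self.buf = deque(maxlen=n_samples)  # oldest samples fall off automatically
        self.n_channels = n_channels
        self.step = step
        self._since_last = 0

    def push(self, sample):
        sample = np.asarray(sample, dtype=float)
        if sample.shape != (self.n_channels,):
            raise ValueError(f"expected {self.n_channels} channels, got shape {sample.shape}")
        self.buf.append(sample)
        self._since_last += 1
        if len(self.buf) == self.buf.maxlen and self._since_last >= self.step:
            self._since_last = 0
            return np.stack(list(self.buf))
        return None

# Usage: 4-sample windows over 2 channels, with a new window every 2 samples.
win = SlidingWindow(n_samples=4, n_channels=2, step=2)
windows = []
for i in range(8):
    w = win.push([i, -i])
    if w is not None:
        windows.append(w)
```

Memory use is fixed by the window length, which suits embedded hardware.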

Training protocols for both users and machine learning models have evolved to support faster classification. Personalized calibration procedures now efficiently capture individual neural signatures, while transfer learning techniques enable new users to benefit from previously trained models. This dual optimization reduces the time required for system setup and improves classification accuracy from the outset.
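One simple way to combine a previously trained model with a short personal calibration is to shrink population-level class means toward a user's own trials. The sketch below does this for a nearest-centroid classifier. It is a deliberately simple stand-in for transfer learning, not the deep-network fine-tuning many systems use, and the function names and `prior_weight` value are hypothetical.

```python
import numpy as np

def adapt_centroids(pop_centroids, user_X, user_y, prior_weight=10.0):
    """Shrink a population model toward one user's calibration data.

    The population centroid counts as `prior_weight` pseudo-trials, so a few
    user trials nudge it and many trials dominate it. Classes the user has
    not yet calibrated keep the population centroid.
    """
    adapted = np.array(pop_centroids, dtype=float)
    for c in range(len(adapted)):
        Xc = user_X[user_y == c]
        n = len(Xc)
        if n:
            adapted[c] = (n * Xc.mean(axis=0) + prior_weight * adapted[c]) / (n + prior_weight)
    return adapted

def nearest_centroid(X, centroids):
    """Assign each row of X to the closest class centroid (Euclidean distance)."""
    dists = np.linalg.norm(X[:, None, :] - centroids[None, :, :], axis=2)
    return dists.argmin(axis=1)

# Toy example: the population model puts class 0 at (0, 0) and class 1 at (4, 4);
# this user's calibration trials are shifted by +1 in both dimensions.
population = np.array([[0.0, 0.0], [4.0, 4.0]])
calib_X = np.array([[1.0, 1.0], [1.0, 1.0], [5.0, 5.0], [5.0, 5.0]])
calib_y = np.array([0, 0, 1, 1])
user_centroids = adapt_centroids(population, calib_X, calib_y, prior_weight=2.0)
predictions = nearest_centroid(np.array([[0.6, 0.6], [4.4, 4.4]]), user_centroids)
```

With two calibration trials per class and `prior_weight=2.0`, each centroid moves halfway toward the user's data, to (0.5, 0.5) and (4.5, 4.5).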

Hybrid BCI systems that combine multiple signal acquisition modalities have shown particular promise for rapid classification. By integrating data from electroencephalography (EEG), functional near-infrared spectroscopy (fNIRS), and other sensing technologies, these systems can leverage complementary information to make more robust classifications. Because fNIRS tracks hemodynamic changes that unfold over seconds, the speed in such hybrids typically comes from faster modalities like EEG, while the slower signal helps confirm or reject decisions. The redundancy created by multiple data streams also enhances system reliability.
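A common way to combine modalities at the decision level is to fuse each modality's class probabilities. The sketch below uses weighted log-linear pooling, which takes a weighted product of the probabilities and renormalizes. The function name, the per-modality weights, and the example probabilities are hypothetical; feature-level fusion, which concatenates features before a single classifier, is an equally valid alternative.

```python
import numpy as np

def fuse_probabilities(prob_list, weights):
    """Decision-level fusion: weighted product of per-modality class probabilities.

    Works in log space for numerical stability, then renormalizes so the fused
    scores sum to one. A weight of 0 ignores a modality; larger weights trust it more.
    """
    log_p = sum(w * np.log(np.clip(p, 1e-12, 1.0)) for p, w in zip(prob_list, weights))
    log_p = log_p - log_p.max(axis=-1, keepdims=True)
    fused = np.exp(log_p)
    return fused / fused.sum(axis=-1, keepdims=True)

# Hypothetical classifier outputs for one trial and two classes.
p_eeg = np.array([0.6, 0.4])
p_fnirs = np.array([0.3, 0.7])
fused = fuse_probabilities([p_eeg, p_fnirs], weights=[1.0, 1.0])
eeg_only = fuse_probabilities([p_eeg, p_fnirs], weights=[1.0, 0.0])
```

Here the two modalities disagree, and with equal weights the fused decision follows fNIRS, whose output is more confident. Setting a modality's weight to zero, for instance while its slower signal is still developing, reduces the fusion to the other modality alone.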

Real-world applications of high-speed thought classification are already emerging in clinical research settings. BCI-controlled prosthetic and robotic limbs now move faster and more smoothly than earlier systems, while communication systems for locked-in patients enable more fluid expression. These applications demonstrate how advances in classification speed translate directly into improved quality of life for users.

The challenge of maintaining accuracy while increasing speed remains an active area of investigation. Researchers are developing novel classification architectures that prioritize both dimensions simultaneously. Techniques like parallel processing of neural features and hierarchical classification schemes show particular promise for achieving this balance.
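A hierarchical scheme can be sketched as two stages: a cheap gate decides whether a window contains any intentional command, and only windows that pass the gate go to a more detailed command classifier, which may also abstain when it is not confident. The function name, thresholds, and toy models below are hypothetical placeholders for trained classifiers.

```python
import numpy as np

REST = -1  # label used when no command is issued

def hierarchical_decode(X, gate_model, command_model, gate_threshold=0.7, command_threshold=0.5):
    """Two-stage decoding: a cheap gate, then a command classifier on gated windows only.

    gate_model(X)    -> (n_windows,) probability that each window holds a command
    command_model(X) -> (n_windows, n_commands) command probabilities
    """
    p_active = gate_model(X)
    labels = np.full(len(X), REST)
    active = np.flatnonzero(p_active >= gate_threshold)
    if active.size:
        p_cmd = command_model(X[active])  # second stage runs on gated windows only
        best = p_cmd.argmax(axis=1)
        confident = p_cmd.max(axis=1) >= command_threshold
        labels[active[confident]] = best[confident]  # low-confidence windows stay REST
    return labels

# Toy models: column 0 plays the gate's output, columns 1: the command probabilities.
X = np.array([[0.9, 0.8, 0.2],
              [0.2, 0.9, 0.1],
              [0.8, 0.4, 0.45]])
labels = hierarchical_decode(X, lambda X: X[:, 0], lambda X: X[:, 1:])
```

The first window passes both stages, the second is rejected by the gate, and the third passes the gate but is too uncertain to act on. Raising either threshold trades speed and responsiveness for fewer false activations, which is the balance this paragraph describes.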

As classification speeds approach the limits of biological neural processing, new questions emerge about the nature of human-machine symbiosis. The potential for BCIs to operate at speeds comparable to or exceeding natural neuromuscular pathways raises intriguing possibilities for enhanced human capabilities. This frontier represents both a technological challenge and an opportunity to redefine human potential.

The future trajectory of BCI classification speed points toward even more remarkable achievements. With continued advancements in materials science, machine learning, and neuroscience, the gap between thought and action in human-machine systems may eventually become imperceptible. This progress promises to fundamentally transform how humans interact with technology and with each other.

By /Aug 15, 2025
